Edge Computing in the Context of Voice Assistants and Beyond

Let’s take a look at some of the technologies behind modern voice user interfaces (VUIs).

Although voice-controlled machines have occupied the minds of sci-fi writers and researchers for decades, only recently did cloud computing throw the doors wide open for the voice interface.

Nowadays, cloud platforms power voice assistants like Google Home and Amazon Echo in millions of homes. However, these smart devices do not continuously record audio and send it to the cloud. A growing share of data processing and analysis happens on the devices themselves.

The role edge computing plays in this and other processes deserves proper credit.

What Is Edge Computing?

We are currently witnessing an explosion of data, devices, and interactions. Sending everything to the cloud and back introduces latency and degrades performance for devices and data located far from centralized public clouds or data centers. It also wastes energy and resources and creates privacy problems. One solution is to bring the computing, storage, and networking building blocks of the cloud closer to consumers.

This solution is called edge computing, or simply ‘edge,’ because a portion of an application, its data, or its services is moved from the network’s central node or nodes out to an edge. (The ‘edge’ of a network is its outer boundary, where it meets the physical world or end users.) The method thus brings data processing as close as possible to the source of the data, such as smart home devices that receive data via sensors. A device that produces or collects data is often called an ‘edge device.’ The data is processed by the device itself or by a local computer or server, so no round trip to a data center or cloud is required. Consequently, both the speed of data transport and the performance of devices and applications at the edge can improve.

A typical modern voice-controlled device uses ‘wake-word’ detection. A small portion of the device’s computing resources is used to process microphone signals, while the rest of the system remains idle. This improves power efficiency and helps extend usage time in portable, battery-operated gadgets. Once the wake word is detected, the rest of the system wakes up to support functions that require more computing power, such as audio capture, compression, transmission, language processing, and voice tracking.
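The two-stage arrangement described above can be sketched in a few lines. This is a minimal illustration, not how any particular assistant is built: audio is assumed to arrive as lists of float samples, and the "detector" in the usage part is a simple loudness threshold standing in for a real keyword-spotting model. The names `VoiceDevice`, `detect_wake_word`, `heavy_pipeline`, and `frames_after_wake` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List

Frame = List[float]  # one chunk of microphone samples (assumed format)


@dataclass
class VoiceDevice:
    # Cheap, always-on stage: returns True when the wake word is heard.
    # A real device would run a small keyword-spotting model here.
    detect_wake_word: Callable[[Frame], bool]
    # Expensive stage (capture, compression, upload, language processing...).
    heavy_pipeline: Callable[[Frame], None]
    frames_after_wake: int = 3  # frames handed to the heavy stage per wake-up
    heavy_calls: int = field(default=0, init=False)

    def run(self, stream: Iterable[Frame]) -> None:
        remaining = 0  # frames left to process while "awake"
        for frame in stream:
            if remaining > 0:
                # Awake: the power-hungry part of the system does the work.
                self.heavy_pipeline(frame)
                self.heavy_calls += 1
                remaining -= 1
            elif self.detect_wake_word(frame):
                # Wake word found: switch the rest of the system on.
                remaining = self.frames_after_wake
            # Otherwise stay idle: only the cheap detector ran on this frame.


# Usage with a placeholder detector (loudness threshold, not real speech).
def loud(frame: Frame) -> bool:
    return max(abs(s) for s in frame) > 0.5


captured: List[Frame] = []
device = VoiceDevice(detect_wake_word=loud, heavy_pipeline=captured.append,
                     frames_after_wake=2)
device.run([[0.0, 0.1], [0.9, 0.2], [0.1, 0.1], [0.2, 0.0], [0.0, 0.0]])
print(device.heavy_calls)  # 2: only the two frames after the trigger
```

The point of the design is that the heavy stage never sees the frames before the trigger, so its cost scales with the number of wake-ups rather than with total listening time.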

Here are a few more terms worth knowing. ‘Fog computing’ was introduced by Cisco in 2014 as a way to bring cloud computing capabilities to the edge. Today it is widely regarded as the standard that defines how edge computing should work.

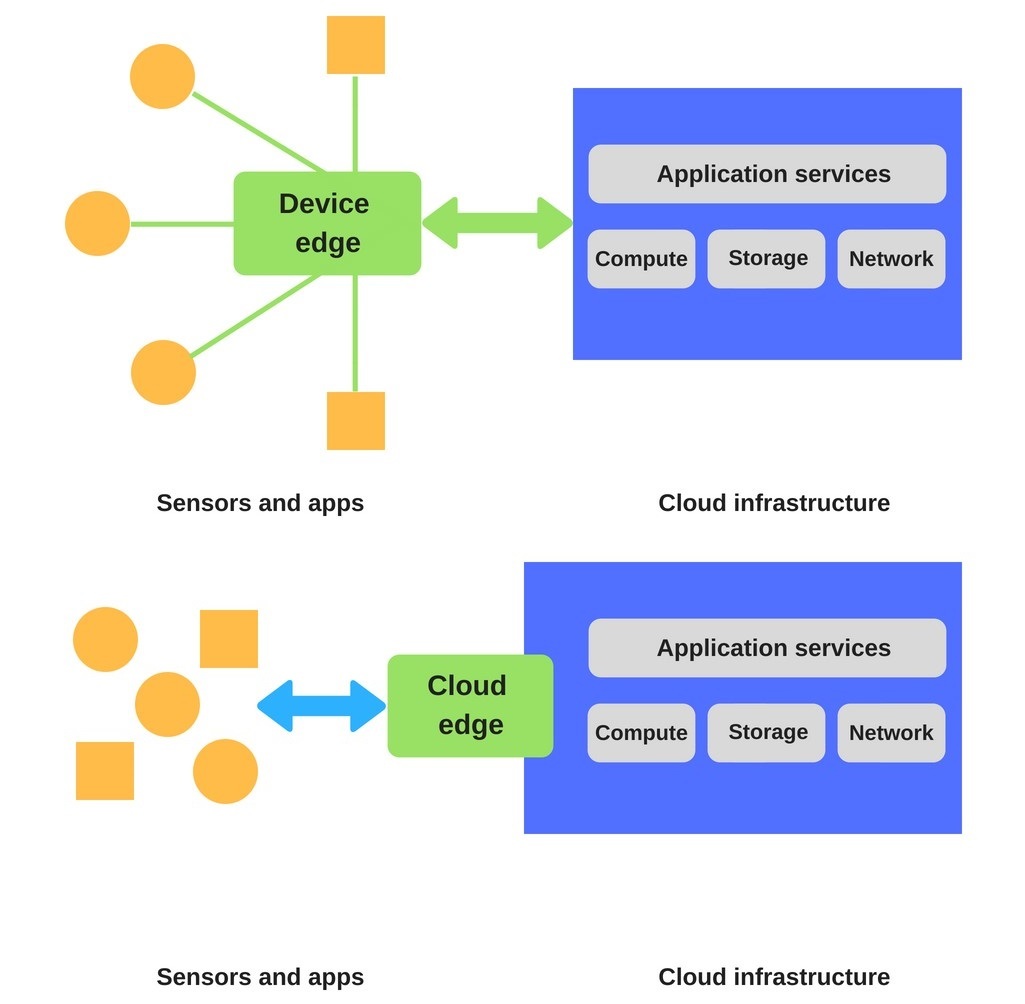

One way of bringing public cloud capabilities to the edge involves a custom software stack that emulates cloud services on existing hardware. AWS IoT Greengrass and Microsoft Azure IoT Edge are examples of software for this ‘device edge’ architecture.

The second model relies on a ‘cloud edge’ owned and maintained by a public cloud provider. Content delivery networks (CDNs) are the classic example of this arrangement: CDNs handle storage to serve content, and the cloud edge layer extends the scenario to include compute and network services.

The Edge in Action

Using edge computing in applications or general functionality can reduce the volume of data that must be moved, the resulting traffic, and the distance the data must travel. This helps shrink latency, cut transmission costs, and improve the speed and quality of services. Security and privacy are also better, at least in theory: the less data sits in a corporate data center or cloud environment, the less is exposed if that environment is compromised.

The Internet of Things (IoT) provides many use cases for edge and fog computing architecture. Multiple sensors installed on machines, components, or devices can produce terabytes of data that must be processed quickly. Edge computing helps streamline the flow of traffic from IoT edge devices.

For example, a home security system that analyzes events on the device itself can limit the data it sends to the cloud to critical alerts only. This improves the performance, power efficiency, and cost-effectiveness of the whole system. Similarly, analyzing real-time performance data from sensors on aircraft engines literally on the fly helps address problems proactively without grounding the aircraft.
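The home security example boils down to a local filter: every event is analyzed on the device, and only the ones that pass a "critical" policy leave it. The sketch below assumes a hypothetical on-device detector that labels each event with a kind and a confidence score; the labels, `CRITICAL_KINDS`, and the `MIN_CONFIDENCE` threshold are illustrative choices, not values from any real product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    kind: str          # label from a hypothetical on-device detector
    confidence: float  # detector score, 0.0 to 1.0


# Hypothetical policy: which kinds are critical, and how sure the local
# detector must be before anything is sent to the cloud.
CRITICAL_KINDS = {"glass_break", "smoke", "door_forced"}
MIN_CONFIDENCE = 0.8


def is_critical(event: Event) -> bool:
    return event.kind in CRITICAL_KINDS and event.confidence >= MIN_CONFIDENCE


def filter_for_cloud(events: List[Event]) -> List[Event]:
    """Analyze events locally and return only those worth uploading."""
    return [e for e in events if is_critical(e)]


events = [
    Event("motion", 0.95),       # routine: handled on the device
    Event("glass_break", 0.91),  # critical and confident: upload
    Event("smoke", 0.40),        # critical kind, but low confidence
    Event("door_open", 0.99),    # routine
]
to_upload = filter_for_cloud(events)
print(f"uploading {len(to_upload)} of {len(events)} events")
```

Here one event out of four reaches the cloud; everything else is handled, logged, or discarded locally.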

Processing large volumes of data efficiently near its source reduces Internet bandwidth usage. This cuts costs and ensures that applications can be used effectively in remote locations. Edge computing is essential when resources cannot stay continuously connected to a network or must rely on spotty Internet or mobile coverage. Autonomous vehicles, implanted medical devices, and fields of highly distributed sensors are just a few examples. Processing sensitive data without ever placing it in a public cloud also adds a layer of security.
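A simple way to see the bandwidth saving is to compare shipping every raw reading with shipping a compact summary computed at the edge. The sketch below assumes a hypothetical window of one temperature reading per second for ten minutes and uses JSON size as a rough proxy for bytes on the wire; the `summarize` function and its fields are illustrative.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Collapse a window of raw readings into a compact summary on the device."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 3),
    }


# Hypothetical window: one reading per second for ten minutes.
raw = [20.0 + (i % 60) * 0.01 for i in range(600)]

raw_payload = json.dumps(raw).encode("utf-8")
summary_payload = json.dumps(summarize(raw)).encode("utf-8")

print(f"raw: {len(raw_payload)} bytes, summary: {len(summary_payload)} bytes")
```

The trade-off is that the cloud no longer sees the raw detail, so whether a summary is acceptable depends on what the data is later used for.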

To realize the value of the massive amounts of data produced by wind turbines, pumps, industrial controllers, and other equipment and sensors, edge and cloud computing should ideally be used together. Nevertheless, the edge is likely to dominate when low latency is required or bandwidth is limited, e.g., in mines or on oil platforms.

Real-time applications such as connected cars or smart cities are intolerant of even a few milliseconds of latency and can be very sensitive to latency variations. Such use cases stand to benefit from edge computing. Users of augmented reality (AR), virtual reality (VR), and gaming apps can also enjoy the more immersive experiences that the edge makes possible.
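Why distance matters at millisecond scale can be shown with back-of-the-envelope arithmetic. Signals in optical fiber travel at roughly two-thirds of the speed of light in vacuum, about 200 km per millisecond, which sets a physical floor on round-trip time before any routing, queuing, or processing delay is added. The distances below are hypothetical examples.

```python
# Approximate signal speed in optical fiber: roughly 200 km per millisecond.
# Real networks add routing, queuing, and processing delays on top of this.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances from a device to where its data is processed.
for label, km in [("on-site edge server", 1),
                  ("regional cloud", 500),
                  ("distant data center", 2000)]:
    print(f"{label:>20}: >= {min_round_trip_ms(km):.2f} ms")
```

No amount of faster hardware in a distant data center can remove this floor; only moving the computation closer can.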

AR for remote repair and telemedicine, IoT devices for capturing utility data, inventory, supply chain and transportation solutions, smart cities, smart roads, and remote security applications will continue to rely on mobile networks. Hence, they can all take advantage of the edge’s ability to move workloads closer to the end user.

Mining operations, transportation, wind farms, solar power plants, and even stores still have limited, unreliable, or unpredictable connectivity. Edge computing neatly supports such environments by allowing sites to remain semi-autonomous and functional when network connectivity is unavailable.
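A common pattern for keeping such sites functional is store-and-forward: data is always accepted locally and is uploaded whenever the link happens to be up. The sketch below is one possible version, assuming a caller-supplied `send` function that reports success; the `StoreAndForward` class and its drop-oldest policy for a full buffer are illustrative choices, not a standard API.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue outbound records locally and flush them once connectivity returns.
    If the buffer fills up, the oldest records are dropped (one possible policy)."""

    def __init__(self, send: Callable[[str], bool], capacity: int = 1000):
        self.send = send  # returns True if the upload succeeded
        self.buffer: Deque[str] = deque(maxlen=capacity)

    def record(self, item: str) -> None:
        self.buffer.append(item)  # always accept data locally
        self.flush()

    def flush(self) -> None:
        while self.buffer:
            if not self.send(self.buffer[0]):
                return  # still offline: keep the data and try later
            self.buffer.popleft()  # delivered: remove from the local store


# Usage with a simulated link that starts out down.
online = False
delivered: List[str] = []


def fake_send(item: str) -> bool:
    if online:
        delivered.append(item)
    return online


site = StoreAndForward(fake_send, capacity=3)
for reading in ["r1", "r2", "r3", "r4"]:
    site.record(reading)  # link is down: readings pile up locally
online = True
site.flush()
print(delivered)  # r1 was dropped when the 3-slot buffer overflowed
```

The site keeps working the whole time; the only effect of the outage is delayed delivery, plus data loss if the outage outlasts the buffer.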

What’s Next?

With advances in machine learning, voice recognition, and IoT technology, voice user interfaces are rapidly becoming smarter. At the same time, cloud computing is going through a fundamental shift. Edge and fog computing enable data processing with minimal latency. Real-time analysis of field data allows applications and devices to respond almost instantaneously. All of this promises considerable benefits for manufacturing, healthcare, telecommunications, finance, and other industries.

Edge computing and AI will keep getting more powerful, while VUIs will be added to virtually anything. The ecosystem will continue to rely on the power of cloud services, e.g., to process data for machine learning purposes. However, the majority of data processing will take place at the level of smart devices.

Voice-controlled applications will grow in number, sophistication, and affordability until an easy-to-use, accurate, and reliable voice interface becomes the primary way to interact with machines. Meanwhile, more enterprises see voice applications as an important new way of connecting with consumers. Others are turning to the edge to handle the massive volumes of data produced by numerous devices and user interactions.

Content created by our partner, Onix-systems.