How Web Application Performance Testing Benefits Your Business

Your web application’s performance directly affects your online business: imagine being unable to process an unprecedented number of orders during the Christmas season. Performance, especially perceived load time, is also key to a good user experience. 53% of mobile site visits are abandoned if a page takes longer than 3 seconds to load, and a one-second lag might cost an online store millions in sales per year. Gartner once estimated the average cost of network downtime at $5,600 per minute. The worst-case scenario would be for your website to crash on Black Friday.

Unfortunately, that may happen if you are not prepared to handle an increased number of concurrent users. Ecommerce websites invest heavily in advertising campaigns, but the higher traffic that marketing delivers sometimes catches them off guard. Timely web application performance testing, particularly load testing, helps you prevent damaging performance issues and maintain optimal performance under varying conditions.

Entrepreneurs don’t need to know exactly how to load test a website, but it’s useful to have a basic understanding of the procedures that are vital to their business and that they pay for. This post explains what performance testing is, when it is done, and how.

Definition and Types of Web Application Performance Testing

Software performance testing, popularly called ‘perf testing,’ is the general name for tests that determine whether a component or system meets specified requirements while running under specific circumstances. While functional testing checks what the software does, perf testing determines how ready a website, mobile app, web service, server, database, or network is to perform under load. The tests examine the software’s speed, responsiveness, stability, scalability, reliability, and resource usage.

In the context of web applications, perf testing entails using software tools to simulate how an app runs under an expected workload and measuring the results against benchmarks and standards. Quantitative perf testing looks at metrics such as the number of virtual users, hits per second, errors per second, response time, latency, and bytes per second. Qualitative testing is concerned with scalability, stability, and interoperability. The goal is to eliminate performance bottlenecks before real users run into them during a load surge.

During performance testing, software developers look for the following major performance symptoms and issues:

- Poor response time — Response time, the chief metric for load testing, is the amount of time between the user inputting data and the application returning a result. Naturally, it should be as short as possible. When response time starts to grow as the load increases, the system has reached its maximum operating capacity. If the peak response time is much longer than the app’s average, there is likely a problem.

- Long load times — Load time, the time it takes an application or page to load initially, should generally be kept to a minimum. According to Maile Ohye from Google, it shouldn’t exceed 2 seconds for ecommerce websites.

- Long wait time/average latency — This metric refers to how long a request spends in a queue before it is processed, or how long it takes to receive the first byte after a request is sent.

- Bottlenecking — A bottleneck is an obstruction in a system, caused by faulty code or hardware issues, that reduces throughput under certain loads. Throughput is the amount of data the system processes during the test, measured in kilobytes per second (it is also commonly expressed as requests or transactions per second). When a bottleneck occurs, the data flow is interrupted or halted, causing a slowdown. This may happen, for example, if servers are not equipped to handle a sudden influx of traffic. Common performance bottlenecks involve CPU utilization (the share of time the central processing unit is busy processing requests), memory utilization (the amount of memory needed to process requests), network utilization, disk usage, and operating system (OS) limitations.

- Poor scalability — Scalability is the system’s ability to handle increasing loads, e.g., by automatically deploying additional resources. Poor scalability shows up as delayed results or a high error rate (the ratio of failed requests to total requests), and it can stem from OS limitations, poor network configuration, or disk and CPU constraints.

- Software configuration issues — Sometimes, configuration settings are not adequate for the expected workload.

- Insufficient hardware resources — Perf testing may reveal physical memory constraints or low-performing CPUs.
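To make the metrics above concrete, here is a minimal Python sketch that summarizes a list of recorded requests into average and peak response time, a 95th percentile, error rate, and throughput. The `Sample` record and its field names are hypothetical; real tools such as JMeter or Locust produce their own result formats. The percentile uses the nearest-rank method, which is one of several common definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One request observed during a test run (hypothetical record format)."""
    latency_s: float      # response time in seconds
    ok: bool              # False if the request failed
    bytes_received: int   # response size, used for throughput in KB/s


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(samples, duration_s):
    """Aggregate raw samples from a run that lasted `duration_s` seconds."""
    latencies = sorted(s.latency_s for s in samples)
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_s": sum(latencies) / len(latencies),
        "p95_response_s": percentile(latencies, 95),
        "peak_response_s": latencies[-1],
        "error_rate": errors / len(samples),           # failed / total requests
        "requests_per_s": len(samples) / duration_s,
        "kb_per_s": sum(s.bytes_received for s in samples) / 1024 / duration_s,
    }
```

A peak far above the average and the 95th percentile, as when one request out of twenty takes 3 seconds while the rest take about 0.2 seconds, is the kind of outlier that deserves investigation.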

Performance tests help identify these performance issues, bugs, and errors, after which developers can decide what they need to do to ensure the system will perform well under the expected workload. Without proper testing, you are likely to face slowdowns, inconsistencies across different operating systems, and poor usability as the number of online customers grows.

A thorough analysis of a website’s speed and overall performance requires using different types of performance tests.

All of the following tests involve monitoring a system’s behavior under simulated potential production conditions.

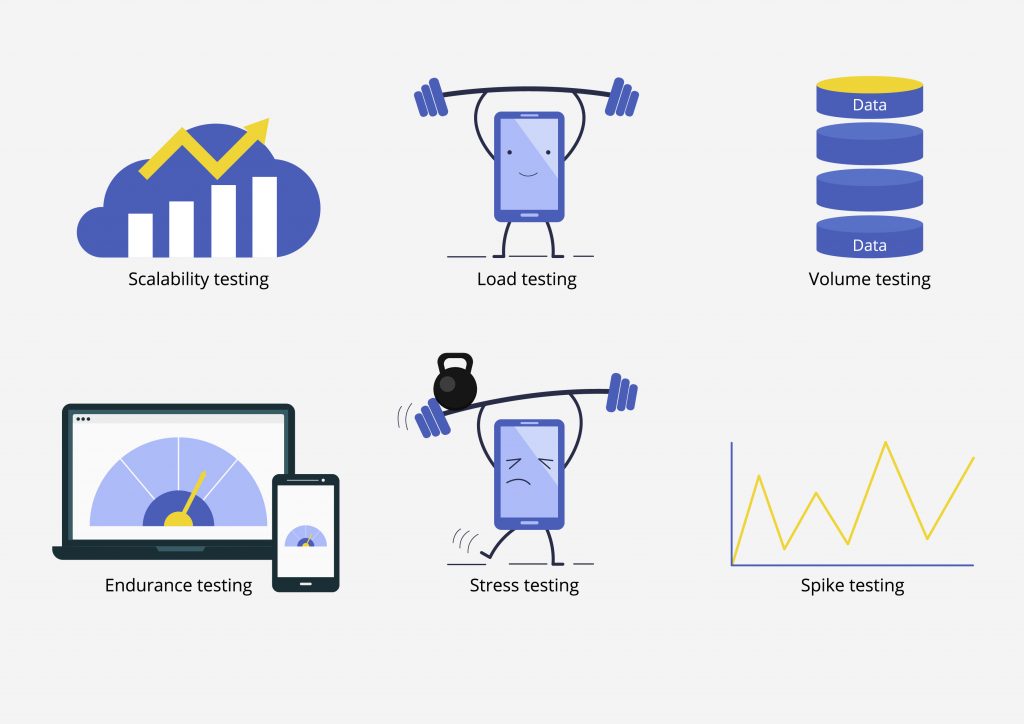

Load testing

Load testing evaluates the application’s ability to perform under real-life load conditions as the workload increases, e.g., with a large number of concurrent virtual users performing transactions. Over a period of time, these users may open a landing page, sign up and sign in, send files, generate reports, etc. Load tests look at how their actions affect the application’s response time and how long the application can sustain the load.

What is a load test? Simply put, QA automation engineers subject the software to a specific expected load, or increase the load, to see at what point significant performance degradation occurs. To simulate normal and anticipated heavy load, a typical load testing tool records user actions with a script recorder. Using these scripts, a load test engine simulates, for example, the number of users who can complete a given user journey per minute within the expected mix of all journeys. The engineers can increase the load up to the breaking point to see what the system can handle. In the process, they monitor and collect hardware and software statistics, such as response time and the CPU, memory, and disk input/output of the physical servers.
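The core idea of a load test engine, many virtual users replaying a scripted journey at the same time while response times and errors are recorded, can be sketched in a few lines of Python. This is a toy, not a substitute for a real tool: `request_fn` is a hypothetical hook standing in for one scripted user action, and threads on a single machine are only suitable for I/O-bound calls such as HTTP requests, whereas production-grade tools distribute load across many machines.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def virtual_user(request_fn, iterations):
    """One simulated user repeating a scripted action; returns (latencies, errors)."""
    latencies, errors = [], 0
    for _ in range(iterations):
        start = time.perf_counter()
        try:
            request_fn()
        except Exception:
            errors += 1  # count any failure; its latency is still recorded
        latencies.append(time.perf_counter() - start)
    return latencies, errors


def run_load_test(request_fn, users, iterations_per_user):
    """Run `users` virtual users concurrently and aggregate their results."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, request_fn, iterations_per_user)
                   for _ in range(users)]
        results = [f.result() for f in futures]
    elapsed = time.perf_counter() - start
    all_latencies = [lat for lats, _ in results for lat in lats]
    return {
        "requests": len(all_latencies),
        "errors": sum(err for _, err in results),
        "throughput_rps": len(all_latencies) / elapsed,
        "max_latency_s": max(all_latencies),
    }
```

Against a staging server, `request_fn` could wrap an HTTP call such as `urllib.request.urlopen(url).read()`, where `url` is a hypothetical test endpoint. Raising `users` step by step and watching `max_latency_s` and `errors` mirrors what engineers do when they look for the point of degradation.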

The testing usually identifies:

- the app’s maximum operating capacity

- sustainability with respect to peak user load

- the element that causes degradation

- the number of users that the app can handle simultaneously

- the scale of the app required in terms of hardware, network capacity, etc., to allow more users

- whether the hosting infrastructure is sufficient to run the application

- any server configuration issues

- network delay between the client and the server

- hardware limitations, such as maxed-out CPUs, memory limits, network bottlenecks, etc.

The following types of performance tests may be regarded as variations of load testing.

Stress testing

When the QA staff want to test a web application’s performance beyond normal working conditions, they raise the load past normal usage patterns. This is known as stress testing. It evaluates the extent to which a system keeps working under intense loads or with some of its hardware or software compromised. Stress testing can be conducted with load testing tools by defining a test case with a very high number of concurrent virtual users. For example, a test may determine how many concurrent users or transactions the application can handle before crashing. The goal is to find the point after which the system fails or breaks and to see how it recovers: whether KPIs such as throughput and response time return to their previous levels, and whether memory leaks, security issues, or data corruption occurred.
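Finding the breaking point usually means raising the load in steps until an agreed limit is crossed. The sketch below assumes a hypothetical `measure(users)` hook that runs one load step and reports the error rate and 95th-percentile response time; the default limits of 5% errors and 2 seconds are illustrative, not recommendations.

```python
def find_breaking_point(measure, start_users, step, max_users,
                        max_error_rate=0.05, max_p95_s=2.0):
    """Raise the load step by step until an acceptance limit is crossed.

    `measure(users)` is a hypothetical hook that runs one load step and
    returns (error_rate, p95_response_s). Returns the last load level that
    stayed within the limits, or None if even the first step failed.
    """
    last_ok = None
    users = start_users
    while users <= max_users:
        error_rate, p95 = measure(users)
        if error_rate > max_error_rate or p95 > max_p95_s:
            break  # first step beyond the limits: the system is past its capacity
        last_ok = users
        users += step
    return last_ok
```

After the break, a stress test would typically drop the load back to normal and check that throughput and response time return to their earlier values, which this sketch leaves out.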

Endurance testing

Endurance tests, also called soak tests, help make sure the software can handle the expected normal load over an extended period of time. The QA staff may also check the system’s sustainability over time with a slow ramp-up. The goal is to reveal memory leaks and other problems, such as gradually degrading speed, that only surface after the system has been running for a long time.
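One simple way to spot a memory leak in soak test data is to fit a straight line to memory usage over time: under a constant load, memory should level off, so a steadily positive slope is suspicious. The sketch below uses an ordinary least-squares slope; the sample format and the 10 MB/hour threshold are assumptions for illustration.

```python
def leak_slope(samples):
    """Least-squares slope of memory use over time, in MB per hour.

    `samples` is a list of (elapsed_hours, memory_mb) pairs collected during
    a soak test at constant load (hypothetical format; needs at least two
    distinct time points).
    """
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    cov = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    return cov / var


def looks_like_leak(samples, threshold_mb_per_hour=10.0):
    """Flag a run whose memory keeps growing faster than the threshold."""
    return leak_slope(samples) > threshold_mb_per_hour
```

A process that fluctuates around the same level yields a slope near zero, while one that gains 25 MB every hour yields a slope of 25.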

Spike testing

A spike test checks how the software reacts to rapid and repeated increases in workload that go beyond normal expectations for short intervals. A sudden jump in the number of virtual users is an example.
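Load testing tools generally let you describe how many virtual users should be active over time (Locust, for instance, supports custom load shapes). The hypothetical helper below computes such a per-second schedule for a spike test: a steady baseline with short, repeated bursts well above it.

```python
def spike_profile(duration_s, baseline_users, spike_users,
                  spike_every_s, spike_length_s):
    """Target number of virtual users for each second of a spike test.

    The load stays at `baseline_users` and jumps to `spike_users` for the
    last `spike_length_s` seconds of every `spike_every_s`-second window.
    """
    return [
        spike_users
        if second % spike_every_s >= spike_every_s - spike_length_s
        else baseline_users
        for second in range(duration_s)
    ]
```

For example, `spike_profile(10, 50, 500, 5, 2)` keeps 50 users for three seconds, jumps to 500 for two, and repeats.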

Volume testing

Volume or flood tests focus on the overall web application performance under varying database volumes. A database is ‘flooded’ with projected large amounts of data to monitor the system’s behavior.
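A volume test can be approximated on a small scale with Python’s built-in `sqlite3` module, using an in-memory database as a stand-in for the production one. The `orders` table, its columns, and the row counts are hypothetical. Because `customer_id` has no index, the lookup scans the whole table, so its timing grows with the data volume, which is the kind of behavior a volume test is meant to expose.

```python
import sqlite3
import time


def time_query_at_volume(rows):
    """Flood an in-memory table with `rows` orders and time one lookup query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    # 1,000 hypothetical customers, each receiving every 1,000th order.
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        ((i % 1000, i * 0.5) for i in range(rows)),
    )
    conn.commit()
    start = time.perf_counter()
    count = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE customer_id = ?", (42,)
    ).fetchone()[0]
    elapsed = time.perf_counter() - start
    conn.close()
    return count, elapsed
```

Calling it for 10,000, 100,000, and 1,000,000 rows and comparing the elapsed times shows how the same query behaves as the database grows; adding an index on `customer_id` and rerunning would show whether that fixes the trend.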

Scalability testing

Scalability tests assess how well the software responds to increasing workloads. This can be determined by gradually raising the user load or data volume while monitoring the effects on the system performance. Alternatively, the QA staff may change the resources, such as CPUs and memory, while the workload stays the same. Such testing helps plan capacity additions to the software system.
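When the QA staff change resources while the workload stays the same, one simple way to compare runs is scaling efficiency: the throughput gain divided by the resource gain. The helper below is a hypothetical sketch of that ratio, not a metric taken from any particular tool.

```python
def scaling_efficiency(baseline, scaled):
    """Compare two test runs that used different amounts of resources.

    Each argument is a (resource_units, throughput_rps) pair, e.g. the number
    of CPU cores or app servers and the throughput measured with them.
    1.0 means throughput grew in proportion to resources; lower values mean
    part of the added capacity was lost to a bottleneck elsewhere.
    """
    base_units, base_rps = baseline
    units, rps = scaled
    return (rps / base_rps) / (units / base_units)
```

Doubling from 2 to 4 servers while throughput rises from 400 to 600 requests per second gives an efficiency of 0.75, a hint that something other than server count limits the system.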

The following section explains in general terms how to do load testing and other performance tests.

Performance Testing Best Practices

To ensure the best user experience, load testing should be done regularly. There are also several ‘best times’ for web application performance testing:

- When the product is nearly complete (all major features developed);

- Once the application becomes functionally stable;

- After a code update or installation of new hardware or software;

- For individual units or modules of the product (e.g., to test the speed of interaction between microservices);

- Once the website grows by a given amount;

- Before events when you expect the number of online visitors to grow;

- After a negative user satisfaction test or low scoring customer sentiment survey;

- If you notice that the site has started to struggle in a way not seen before.

Many organizations that follow the Waterfall methodology perform load testing after the software development phase or every time a version is released. Agile teams should load test web applications continuously. Stress tests should be performed periodically, especially before major events like Black Friday, ticket sales for a popular concert, or elections. This ensures you know the system’s endurance capabilities, are prepared for unexpected traffic spikes, and are ready to fix any performance issues that may occur. Mission-critical applications, such as space launch programs or life-saving medical equipment, should be tested to ensure that they run without deviations for a long time.

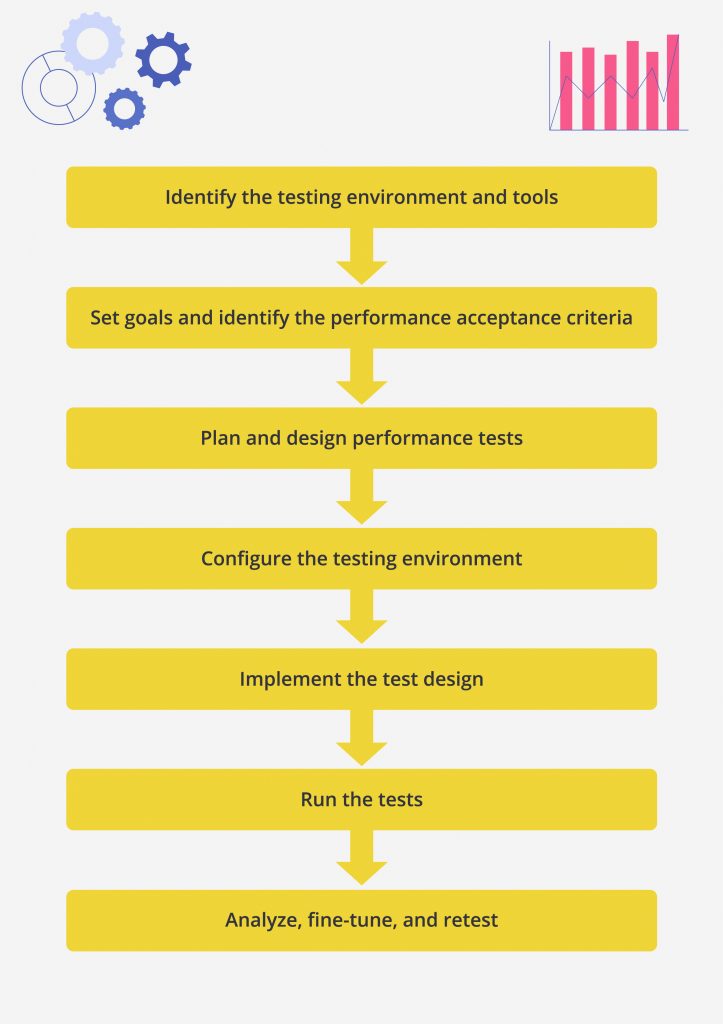

A generic perf testing process includes the following steps:

1. Identify the testing environment and tools.

A testing environment is where software, hardware, and networks are set up to execute performance tests. Developers need to identify the physical test environment and production environment, the tools available, and any performance testing challenges early.

Testing in the actual production system or its replica is one of the performance testing best practices. If a live site is tested, many organizations choose to run tests outside of peak hours to reduce the risk of trouble for real users. If it is impossible to conduct performance testing in the exact production environment, the test environment may be a subset of the production system with fewer servers of the same or lower specification. The team should at least try to match the hardware components, OS and settings, network, databases, software specifications, and other applications used on the production system. At Alternative-spaces, load testing is conducted on both the live and beta versions of a project.

Perf testing will be more successful if:

- The creation of the performance testing environment involves both developers and QA engineers.

- The team develops a test environment that takes into account as much user activity as possible.

- The performance testing environment is isolated from the environment used for quality assurance testing.

For an accurate picture of web performance, it’s essential to simulate production conditions as closely as possible for load and stress tests. Sometimes, that means throwing millions of requests at the app. Instead of doing it manually, most businesses nowadays leverage load testing tools or services. Licensed or enterprise tools usually come with a capture/playback facility and can simulate extraordinary numbers of users. Support for many protocols means that load testing can be performed on Enterprise Resource Planning (ERP) systems, Customer Relationship Management (CRM) software, streaming media, and other apps. Almost all licensed tools have a free trial version, and there are free open source tools as well. Below is a list of popular testing tools, divided into two groups:

1) Tools for understanding server-side performance, such as:

- Apache JMeter — JMeter is arguably the most popular open source load testing tool for web and application servers. This Java application can test performance on files, servlets, Java objects, FTP servers, databases, and queries. Testers use JMeter to simulate a heavy load on a server, group of servers, network, or object to test its strength, to analyze overall performance under different load types and heavy concurrent load, and to produce graphical analyses of performance.

- Locust.io — This open source load testing framework lets testers define complex transactions in Python code and run load tests distributed over multiple machines, which allows it to simulate millions of concurrent users. It also reports the results of each run.

- Siege — This open source load testing utility supports the HTTP and HTTPS protocols, GET and POST requests, basic authentication, cookies, and transaction logging. It lets you ‘besiege’ a web server with a user-defined number of simulated clients.

2) Tools for understanding client-side performance, for example:

- HP LoadRunner — This is arguably the most commonly used licensed load testing tool on the market today. LoadRunner features a virtual user generator that simulates the actions of human users. It puts applications under normal and peak load conditions by simulating hundreds of thousands of such users.

- LoadNinja — This cloud-based load testing tool lets testers record, instantly play back, and run comprehensive load tests in real browsers at scale. According to its vendor, it helps increase test coverage and cut testing time by over 60%.

- NeoLoad — Its vendor positions it as an enterprise-grade load testing platform that covers cloud-ready apps, IoT, mobile apps, and more. It is designed for DevOps, integrates into existing Continuous Delivery pipelines, and supports faster testing across the full Agile software development lifecycle.

- WebLOAD — Positioned as a LoadRunner replacement for load and performance testing, this cloud-based service offers a robust toolset tailored to enterprise applications with thousands of users.

- WebPagetest.org — The free tool allows testing a web page in various real browsers from any location, over any network condition.

The choice of tools for testing depends on many factors, such as the protocols supported, license cost, hardware requirements, or platform support.

2. Set goals and identify the performance acceptance criteria.

Before performance testing, we always discuss the customer’s requirements and business needs with them. It’s essential to determine whether the system satisfies the customer’s needs. We also analyze the system and prepare a preliminary list of recommendations for load testing and cases that can overload the system, e.g., image uploads, file generation, or sending out large amounts of data.

The QA staff should test the most frequent flows for your users, and understand which metrics matter most to the users and the web app’s performance both in the browser and on the server. They need to know what normal conditions and peak conditions are, and should establish some achievable benchmarks. The resources available always limit a website or app’s performance, so it’s important to know the limits to set achievable goals.

Since throughput is the best indicator of a website’s capacity, it’s good to start by setting a throughput goal. The QA staff also set goals and acceptable limits for response time and identify project success criteria. In performance testing, results are not simply true or false; they are judged against thresholds. For instance, testers might decide that responses should have a specified average time and must never exceed a certain maximum time. Testers should be able to set performance criteria and goals even when the project specifications do not define them.
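Because the outcome is a set of thresholds rather than a single pass/fail bit, it helps to write the agreed criteria down in a form that can be checked automatically after every run. The `Criteria` fields and the result keys below are hypothetical; any real project would use the limits agreed with the customer.

```python
from dataclasses import dataclass


@dataclass
class Criteria:
    """Hypothetical acceptance thresholds agreed with the customer."""
    min_throughput_rps: float
    max_avg_response_s: float
    max_peak_response_s: float
    max_error_rate: float


def evaluate(results, criteria):
    """Return a list of violated thresholds; an empty list means the run passes."""
    failures = []
    if results["throughput_rps"] < criteria.min_throughput_rps:
        failures.append("throughput below target")
    if results["avg_response_s"] > criteria.max_avg_response_s:
        failures.append("average response time too high")
    if results["peak_response_s"] > criteria.max_peak_response_s:
        failures.append("peak response time above the unacceptable maximum")
    if results["error_rate"] > criteria.max_error_rate:
        failures.append("error rate too high")
    return failures
```

Returning the list of violations, rather than a bare boolean, makes the report readable: it says which goal was missed, not just that something was.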

Typically, testers see how the app performs as-is, and if the run is acceptable, the results become the initial baselines. They also need to analyze available performance data to develop the parameters of the load required for the tests. To contextualize the future test results, it is also useful to measure performance beforehand. For instance, testers may monitor user journeys 24/7 for several weeks, measuring the time to complete a journey every 5 minutes. Then they keep this data around to judge whether or not performance regresses with time. Measurements and test data from similar applications help set performance goals as well.
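Once baseline measurements exist, such as journey completion times sampled every 5 minutes, later runs can be compared against them to catch regressions. The sketch below compares medians so that a few outliers do not dominate; the 10% tolerance is an assumption, not a standard.

```python
from statistics import median


def regressed(baseline_s, current_s, tolerance=0.10):
    """Flag a regression if the median journey time grew by more than `tolerance`.

    Both arguments are non-empty lists of journey completion times in seconds.
    """
    base = median(baseline_s)
    return (median(current_s) - base) / base > tolerance
```

With a baseline median of 2.0 seconds, a run with a median of 2.1 seconds (5% slower) passes, while one with a median of 2.5 seconds (25% slower) is flagged.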

3. Plan and design performance tests.

The team needs to develop a clear testing plan. It is necessary to simulate a variety of end users, plan performance test data, and outline what metrics will be gathered. The testers identify key scenarios to test for all use cases and some specific situations the application is likely to encounter. To understand why performance suffers at a given number of users, they need to know what those users were doing. It is also important that different user journeys be represented in the test in proportion to how often they occur in different periods, reflecting possible traffic peaks.
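Representing journeys in proportion to real traffic can be as simple as a weighted random choice per virtual user. The journey names and shares below are hypothetical; in practice they would come from analytics for the period being simulated, such as a holiday peak.

```python
import random

# Hypothetical journey mix: share of all sessions following each journey.
JOURNEY_MIX = {"browse_catalog": 0.60, "search": 0.25, "checkout": 0.10, "sign_up": 0.05}


def assign_journeys(num_users, mix, seed=None):
    """Pick a journey for each virtual user in proportion to the mix weights."""
    rng = random.Random(seed)  # a fixed seed makes a test run reproducible
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=num_users)
```

With 10,000 virtual users, roughly 6,000 browse the catalog and about 1,000 check out; swapping in a different mix lets the same script model a quiet weekday or a sales peak.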

A performance test scenario is a combination of machines (agents), scripts, and virtual users that run during a testing session. Having identified realistic scenarios that take into account user variability, test data, and target metrics, the QA staff create one or two workload models.

A mixture of tests is always useful. It gives a better understanding of the limits of the system under different conditions and helps plan the necessary redundancy in the system.

4. Configure the testing environment.

The team arranges the testing tools and prepares a dedicated testing environment that closely reflects real-world conditions, and automates deployment to this testing environment. For example, if an online store attracts a lot of international visitors, they need a corresponding infrastructure and load testing scripts that contain tasks performed by virtual users. Then, they need to automate the kick-off of the performance tests and the recording of the results.

5. Implement the test design.

The team develops the performance tests according to the test design. Before running the scenario, they set up the scenario configuration and scheduling. A large amount of unique data should be ready in the data pool, and the number of users should be established for each scenario or script.
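A data pool has to hand out unique records even when many virtual users ask for them at once; otherwise two users may, for example, try to sign up with the same email address. The class and the generated accounts below are a hypothetical sketch using a lock to keep allocation thread-safe.

```python
import threading


class DataPool:
    """Hands out each test record at most once across concurrent virtual users."""

    def __init__(self, records):
        self._records = list(records)
        self._next = 0
        self._lock = threading.Lock()  # serializes access to the shared cursor

    def take(self):
        with self._lock:
            if self._next >= len(self._records):
                raise LookupError("data pool exhausted; prepare more unique records")
            record = self._records[self._next]
            self._next += 1
            return record


def make_test_accounts(count):
    """Hypothetical unique sign-up data, one account per virtual user."""
    return [{"email": f"loadtest+{i}@example.com", "password": f"pw-{i:06d}"}
            for i in range(count)]
```

Raising an error when the pool runs out, instead of silently reusing records, makes an undersized data pool visible before it skews the results.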

6. Run the tests.

The team executes and monitors the tests and collects the generated data. A scenario can be observed using run-time transaction, system resource, web resource, network delay, and other monitors. Teams usually run at least two rounds of tests. The first round allows for the opportunity to identify any problem areas. Then, the team repeats by running the tests with the same parameters under the same conditions to ensure that performance is consistent, or experiments with different parameters. Depending on the results, the team may need more testing iterations.

7. Analyze, fine-tune, and retest.

Once the web app’s performance has been quantified with data, the team may analyze the test results using graphs and reports generated during scenario execution. Now they can share the findings and start resolving any issues identified. They fine-tune the app, and after fixing the problems, rerun the tests using the same and different parameters to measure improvement.

Usually, we run a series of load, stress, endurance, peak, and volume tests. Once perf testing is completed, we prepare a detailed report with the test results, performance charts, list of errors (if any), and recommendations for the customer on what should be improved to solve the problems.

Here is a recent example of how Alternative-spaces load tests web applications. Adoric is a SaaS tool for managing digital marketing campaigns. The task was to load test the website’s API: the customer wanted to see how the server performed if the load increased over a short time.

Before API testing, we collect information about the routes that need to be load-tested and prepare API documentation. It consists of a list of requests that we will send during the testing process and responses we would expect to receive in return. We loaded the API by gradually adding users. Once we reached the desired number of users, the test went into the phase of supporting a constant load level. Then, we began to gradually reduce the load until the number of users dwindled to a minimum.
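The ramp-up, steady-state, and ramp-down phases described above can be expressed as a per-second target for the number of virtual users. The sketch below is illustrative only; its parameters are hypothetical and are not the settings used in the Adoric test.

```python
def ramp_hold_ramp(peak_users, ramp_up_s, hold_s, ramp_down_s, min_users=1):
    """Target virtual-user count for each second of a ramp/hold/ramp test.

    Users are added linearly until `peak_users`, held constant for `hold_s`
    seconds, then removed linearly back down to `min_users`.
    """
    profile = []
    for t in range(ramp_up_s):
        profile.append(max(min_users, round(peak_users * (t + 1) / ramp_up_s)))
    profile.extend([peak_users] * hold_s)
    for t in range(ramp_down_s):
        remaining = peak_users - (peak_users - min_users) * (t + 1) / ramp_down_s
        profile.append(round(remaining))
    return profile
```

For example, `ramp_hold_ramp(100, 4, 3, 4, min_users=20)` climbs through 25, 50, and 75 users to 100, holds 100 for three more seconds, and then steps down through 80, 60, and 40 to 20.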

During the test, the API worked stably at loads of 200 users per second or fewer. When the load exceeded 250 users per second, we noticed increased latency. The average load during the test was 1,161 users per minute.

Based on the test results, the recommended load for the existing architecture characteristics could be 150 users per second or 9,000/minute. If the load didn’t exceed 150 users per second, the server could sustain 13 million users for a period of 24 hours.

When the customer received the report, they decided to change the infrastructure to improve the API’s performance. After an update, we conducted another round of load testing, and the average load and response times decreased. As a result, currently, the server can process requests coming from 2,000 users per second or 120,000 users per minute, while the response time has halved. The latency starts growing if the website has 2,200 visitors per second. The critical load for the current architecture is 2,500 users per second. If there is a stable load of 2,000/second, the server will be able to withstand 172 million users over 24 hours.
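The per-minute and 24-hour figures above come from multiplying the sustained per-second rate by 60 seconds and by the 86,400 seconds in a day, which assumes the rate stays constant around the clock:

```latex
\begin{aligned}
150\ \text{users/s} \times 60\ \text{s/min} &= 9{,}000\ \text{users/min} \\
150\ \text{users/s} \times 86{,}400\ \text{s/day} &= 12{,}960{,}000 \approx 13\ \text{million users per 24 h} \\
2{,}000\ \text{users/s} \times 60\ \text{s/min} &= 120{,}000\ \text{users/min} \\
2{,}000\ \text{users/s} \times 86{,}400\ \text{s/day} &= 172{,}800{,}000 \approx 172.8\ \text{million users per 24 h}
\end{aligned}
```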

Performance testing best practices always start with ‘test early, and test often.’ The team should start anticipating and solving performance issues early in the application’s life cycle. The earlier that perf testing begins, the easier it will be to address any arising problems.

It’s best to run the designed load tests regularly, benchmark against the established baselines, and conduct in-depth ad hoc tests that relate to significant launches, marketing campaigns, sales, or expected seasonal peaks. When testing before a significant event, it’s crucial to allocate adequate time for fixing any issues discovered and re-testing.

It’s vital to evaluate both the test results and the evolving business needs. Any information you can collect about site visitors can help you identify ways to improve the user experience because it gives you the parameters to test against. As a website or app grows, changes should be made to accommodate a larger user base. If those changes are not tested, the business might suffer from its own growth. If competitors achieve faster load times or more responsive software, the company should adjust its strategy accordingly.

Successful performance testing consists of repeated and smaller tests. Databases, servers, and services constituting an app should be tested separately and together.

Summing up the Benefits of Software Performance Testing

A consistent level of software performance brings many benefits to an online business. Performance should be considered a crucial feature of a software product, and its testing should be an ongoing process that is part of the overall strategy.

Most performance issues revolve around speed. Testing a website’s or application’s performance lets you improve its speed and overall performance, which leads to a better user experience, reduced bounce rates, higher conversions, increased customer satisfaction, and boosted sales and revenue. Using different types of performance tests, you can become aware of potential problems and plan how to deal with them in advance. Here are other positive results of testing your website’s performance:

- A well-performing website, which had any bottlenecks discovered and issues fixed before it went into production, will receive more traffic and gain more trust from users.

- If a website’s response time is kept short even as it scales up to a broader audience, one-time customers will be more willing to return to this website.

- Timely tests help ensure that your website is prepared for the planned marketing campaigns.

- Load testing helps identify the maximum operating capacity and bottlenecks of the system, improving its scalability and stability and reducing the risk of the system’s costly downtime.

- Knowing in advance what problems may pop up and when, you can take steps to prevent this from occurring at a crucial time, removing the risk of user dissatisfaction, loss of sales, and brand damage.

- Timely load testing helps determine whether the system needs fine-tuning, hardware or software modifications, or other infrastructure changes as it scales upward. It also supports complementary performance management strategies and reduces the cost of failures.

- Stress and load test data can help you decide how to best use your resources to accommodate more traffic. The information is helpful when you model and forecast capacity needs, as well as in goal setting, prioritization, strategic planning, and budgeting decisions.

- Performance testing gives you confidence in the system’s reliability and performance and helps protect investments against product failure.

Improved customer satisfaction, loyalty, and retention usually more than make up for any costs of load testing web applications.

Content created by our partner, Onix-systems.
